Ocular Diagnosis and Interpretability Teaching Module

Lab created for Applied Machine Learning EEC174AY on Ocular Diagnosis and Model Interpretability

This page highlights Mini-Project B1, a teaching module I created for college-level machine learning students. The lab covers classifying ocular diseases from OCT scans, addressing data imbalance, and interpreting models through saliency mapping and clustering. Because the class is actively using this lab, all solutions and outputs shown here were generated by me.

Overview of Mini-Project

This project demonstrates the following skills:

- Medical Imaging Analysis: Preprocessing and augmenting OCT scans.

- Deep Learning for Classification: Training models to classify ocular conditions.

- Model Evaluation: Assessing models with ROC curves and AUC.

- Model Interpretability: Implementing saliency mapping with Grad-CAM.

- Handling Data Imbalance: Adjusting for imbalanced datasets with weighted sampling.

- Unsupervised Learning: Performing KMeans clustering for exploratory analysis.

Explore the assignment notebook here:

Mini-Project B1: OCT Scan Analysis and Interpretability

Detailed Steps in the Lab:

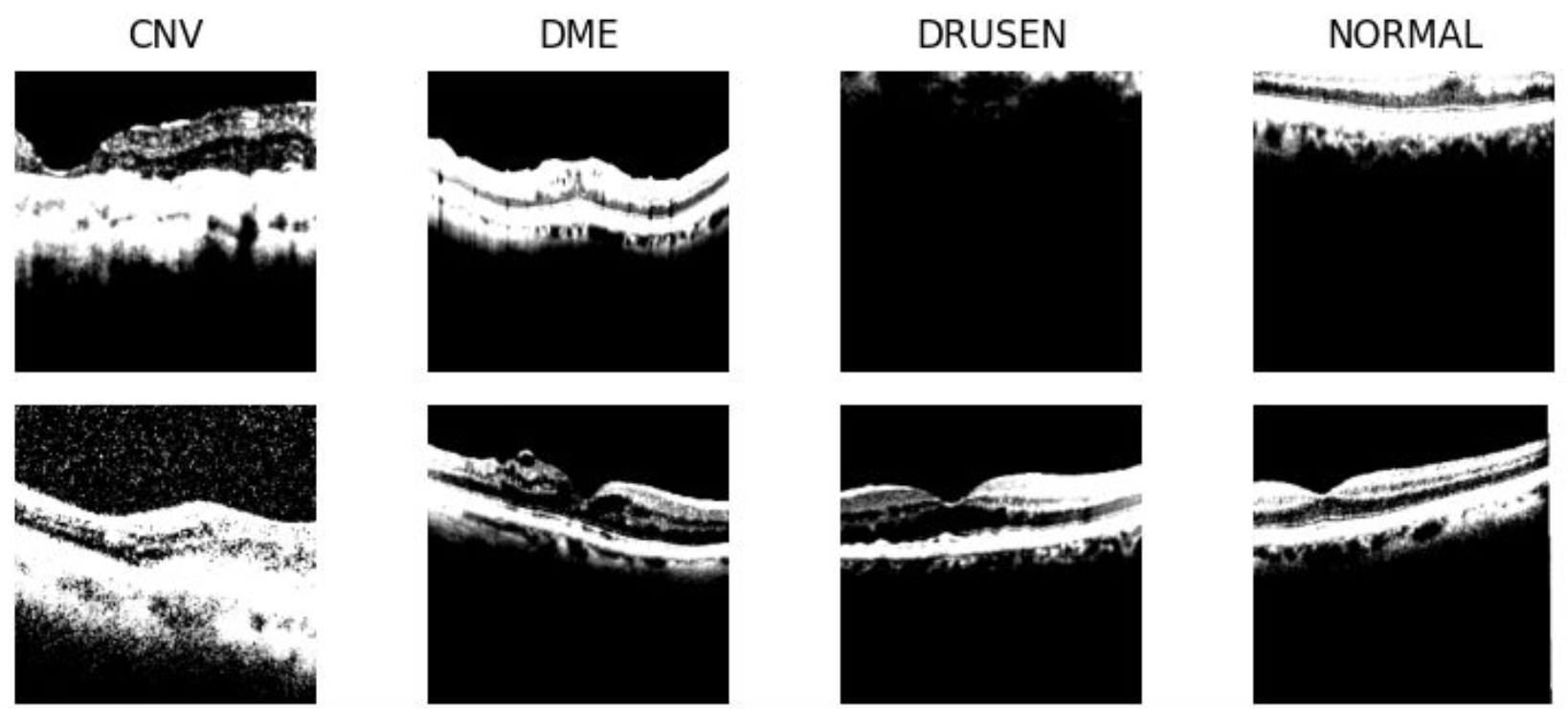

1. Dataset Setup and Training

- Load images from the OCT dataset.

- Perform data augmentations to improve model generalization.

- Create dataset loaders and visualize class distributions.

OCT Class Visualization:

- Train a deep learning model to classify ocular conditions.

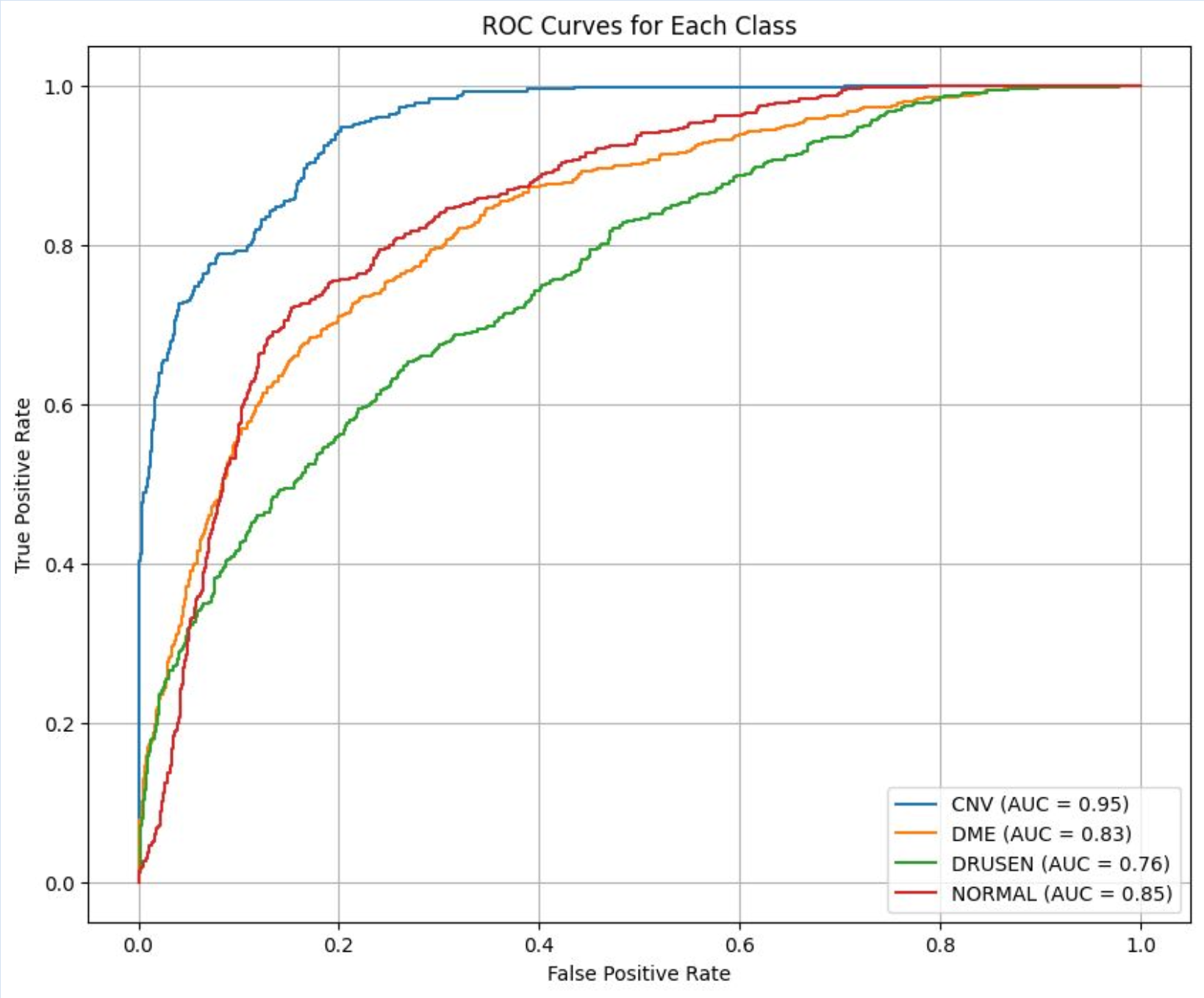
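The setup steps above can be sketched with NumPy alone. This is a minimal illustration, not the lab's solution: the `augment` and `class_counts` helpers, the class names, and the synthetic image are all hypothetical stand-ins, and the notebook itself may rely on a deep learning framework's dataset and transform utilities instead.

```python
import numpy as np

def augment(image, rng, max_shift=4):
    """Randomly flip a 2-D grayscale scan left-right and shift it horizontally."""
    out = image
    if rng.random() < 0.5:
        out = np.fliplr(out)
    shift = int(rng.integers(-max_shift, max_shift + 1))
    # np.roll wraps pixels around the edge; a real pipeline would
    # more likely pad or crop instead (simplification for this sketch).
    return np.roll(out, shift, axis=1)

def class_counts(labels, class_names):
    """Count how many samples fall into each class."""
    tallies = np.bincount(labels, minlength=len(class_names))
    return {name: int(n) for name, n in zip(class_names, tallies)}

rng = np.random.default_rng(0)
scan = rng.random((64, 64))        # synthetic stand-in for an OCT scan
augmented = augment(scan, rng)

# Hypothetical class names and labels, used only for illustration.
class_names = ["condition_a", "condition_b", "condition_c", "normal"]
labels = np.array([0, 0, 0, 1, 2, 3, 3, 3, 3, 3])
counts = class_counts(labels, class_names)
print(counts)
```

The resulting dictionary can be passed to any plotting tool as a bar chart to show how evenly the classes are represented.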

2. Model Evaluation

- Evaluate model performance using metrics such as:

  - Loss and accuracy.

  - ROC curves and AUC for binary and multi-class classification.

ROC and AUC Curve Visualization:

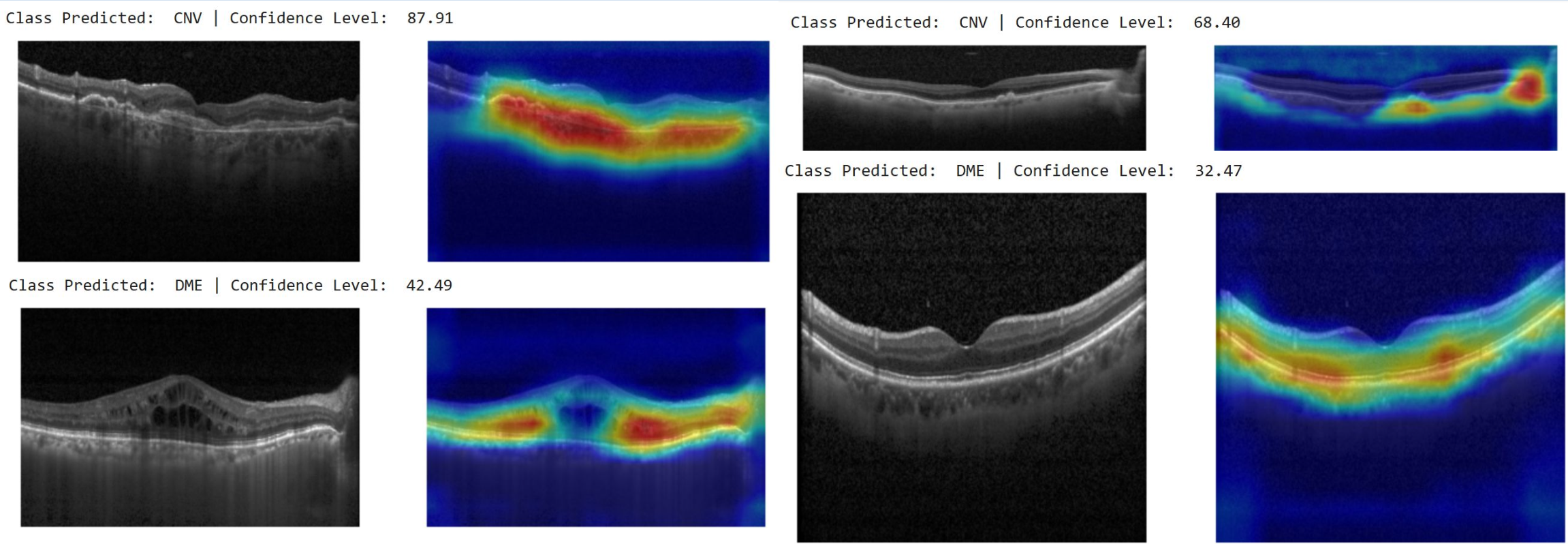
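To make the ROC and AUC evaluation concrete, here is a small from-scratch sketch in NumPy. It assumes binary labels with both classes present, and it handles the multi-class case with a one-vs-rest split, which is one common convention and not necessarily the one the lab uses. The function names are hypothetical.

```python
import numpy as np

def roc_curve_points(y_true, scores):
    """Return (fpr, tpr) by sweeping the decision threshold from high to low."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores, kind="stable")
    y_sorted, s_sorted = y_true[order], scores[order]
    tps = np.cumsum(y_sorted == 1)   # true positives at each cutoff
    fps = np.cumsum(y_sorted == 0)   # false positives at each cutoff
    # Keep only the last sample of each run of tied scores,
    # so that tied scores produce a single curve point.
    last_of_run = np.r_[s_sorted[1:] != s_sorted[:-1], True]
    tps, fps = tps[last_of_run], fps[last_of_run]
    tpr = np.r_[0.0, tps / tps[-1]]
    fpr = np.r_[0.0, fps / fps[-1]]
    return fpr, tpr

def auc(fpr, tpr):
    """Area under the curve using the trapezoid rule."""
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))

def one_vs_rest_auc(labels, probs):
    """One AUC per class: class k versus all other classes."""
    labels = np.asarray(labels)
    return [auc(*roc_curve_points(labels == k, probs[:, k]))
            for k in range(probs.shape[1])]

# Binary example with made-up scores.
fpr, tpr = roc_curve_points([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
binary_auc = auc(fpr, tpr)

# Multi-class example: perfectly confident, correct predictions.
per_class_auc = one_vs_rest_auc([0, 1, 2], np.eye(3))
print(binary_auc, per_class_auc)
```

Plotting `fpr` against `tpr` gives the ROC curve itself; the diagonal from (0, 0) to (1, 1) corresponds to a classifier that guesses at random.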

3. Model Interpretability

- Implement saliency mapping using the Grad-CAM library.

- Highlight regions of OCT scans that contribute most to the model’s predictions.

- Generate visual explanations of model decisions.
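The lab relies on a Grad-CAM library, so the sketch below is not that library's API. It only illustrates the core Grad-CAM computation, assuming the feature maps of a convolutional layer and the gradients of the target class score with respect to them have already been extracted as arrays of shape `(K, H, W)`. The function name and the toy inputs are hypothetical.

```python
import numpy as np

def grad_cam_map(activations, gradients):
    """Combine feature maps into a class-specific heatmap.

    activations, gradients: arrays of shape (K, H, W) for one image,
    where gradients holds d(class score) / d(activations).
    """
    # One weight per channel: the spatial average of its gradients.
    weights = gradients.mean(axis=(1, 2))               # shape (K,)
    # Weighted sum of the feature maps over the channel axis.
    cam = np.tensordot(weights, activations, axes=1)    # shape (H, W)
    # ReLU keeps only regions that push the class score up.
    cam = np.maximum(cam, 0.0)
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Toy input: channel 0 supports the class, channel 1 argues against it.
activations = np.array([[[1.0, 0.0], [0.0, 0.0]],
                        [[0.0, 0.0], [0.0, 1.0]]])
gradients = np.array([np.full((2, 2), 1.0),
                      np.full((2, 2), -1.0)])
heatmap = grad_cam_map(activations, gradients)
print(heatmap)
```

In practice the heatmap is upsampled to the input resolution and overlaid on the scan, which is the kind of step a library typically handles.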

Grad-CAM Saliency Mapping:

4. Handling Data Imbalance

- Train the model on an unbalanced version of the dataset.

- Use weighted sampling to adjust for imbalanced class distributions.

- Compare model performance before and after balancing.

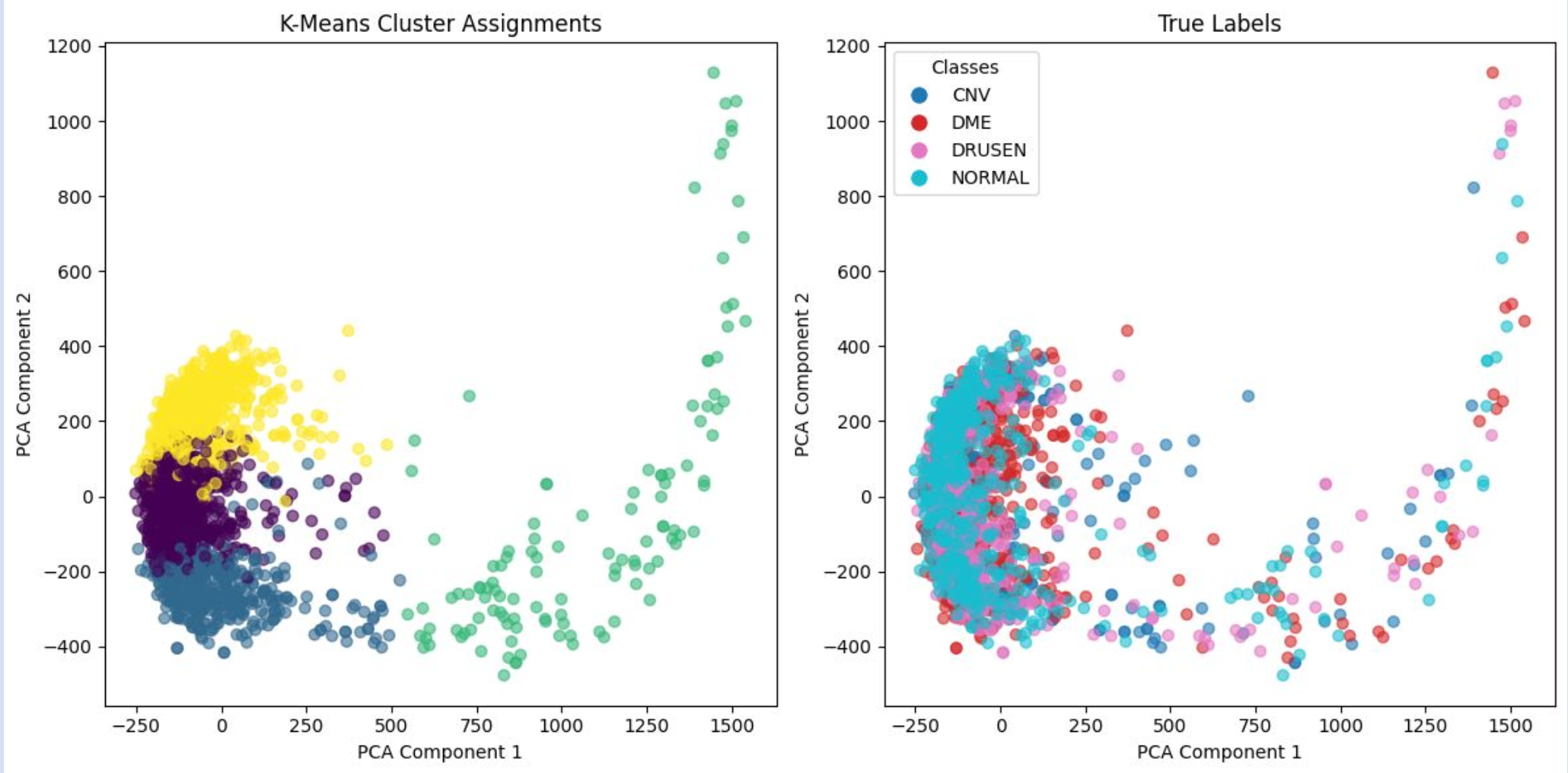

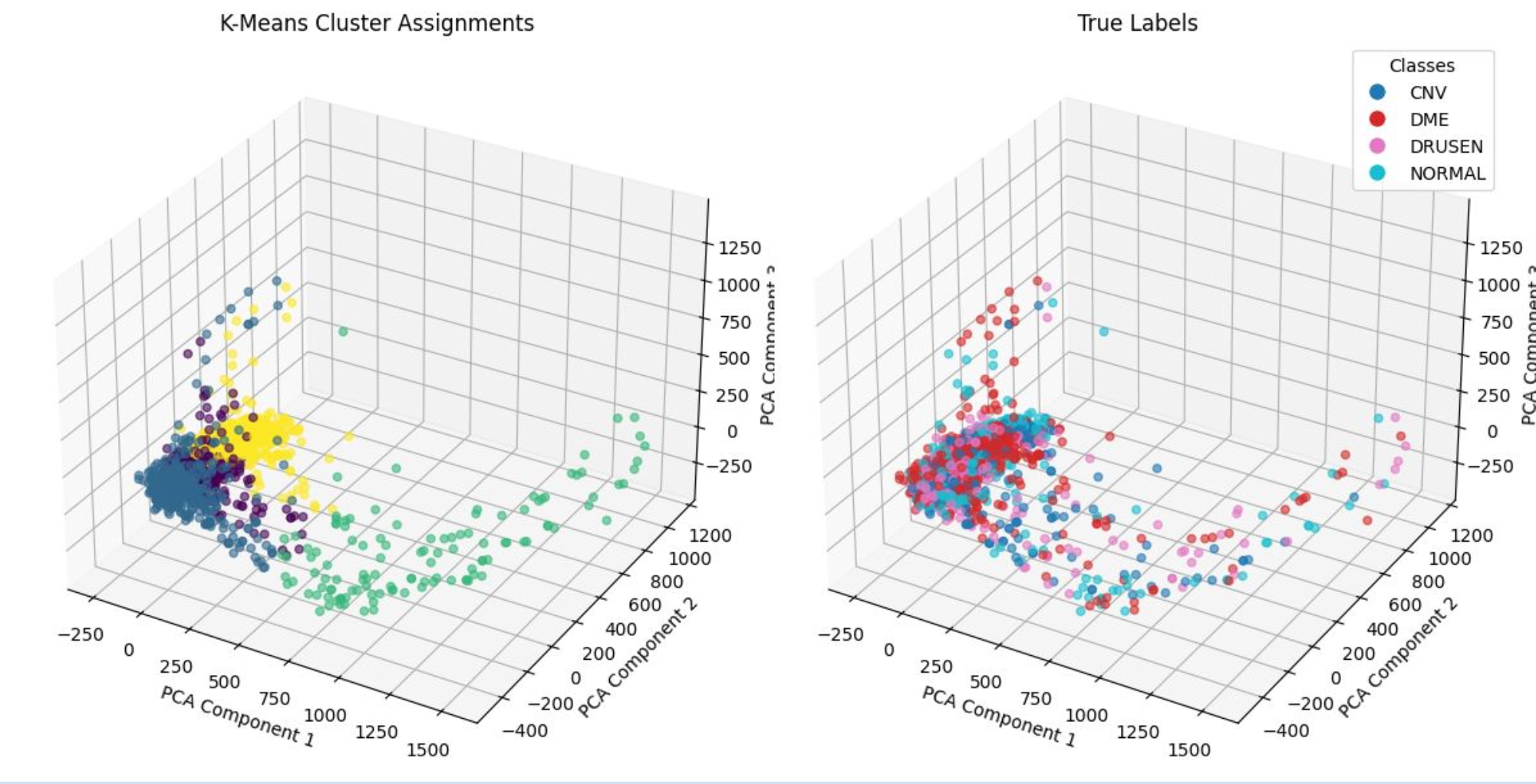
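Weighted sampling can be illustrated with inverse-frequency weights: each sample is drawn with probability inversely proportional to the size of its class, so rare classes appear about as often as common ones. The sketch below uses NumPy and a made-up 90/10 label split. The helper name is hypothetical, and whether the lab uses exactly this weighting is an assumption. Per-sample weights of this form are the kind of input that samplers such as PyTorch's `WeightedRandomSampler` accept.

```python
import numpy as np

def inverse_frequency_weights(labels, num_classes):
    """Per-sample weights equal to 1 / (size of the sample's class)."""
    counts = np.bincount(labels, minlength=num_classes)
    class_weights = 1.0 / np.maximum(counts, 1)   # guard against empty classes
    return class_weights[labels]

rng = np.random.default_rng(0)
labels = np.array([0] * 900 + [1] * 100)          # synthetic 90/10 imbalance

weights = inverse_frequency_weights(labels, num_classes=2)
probs = weights / weights.sum()

# Draw indices with replacement, as a weighted sampler would for one epoch.
drawn = rng.choice(len(labels), size=10_000, replace=True, p=probs)
resampled_fraction = np.bincount(labels[drawn], minlength=2) / len(drawn)

print("original fraction of class 1:", labels.mean())
print("resampled class fractions:", resampled_fraction)
```

Because sampling is done with replacement, minority-class images are repeated within an epoch, which is one reason augmentation is often paired with this technique.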

5. Unsupervised Learning

- Apply KMeans clustering to OCT scan features.

- Visualize cluster assignments for exploratory data analysis.

KMeans Clustering on OCT Data:

Example: KMeans Clustering on MNIST Dataset:

KMeans is also applied to the MNIST dataset to verify the clustering approach.
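In the same spirit as checking the clustering on a dataset with known structure, the sketch below runs a small KMeans (Lloyd's algorithm) on two synthetic, well-separated blobs. It is an illustration only: the function names are hypothetical, the deterministic farthest-point initialization is a simplification chosen for reproducibility, and the lab may use a library implementation with a different initialization.

```python
import numpy as np

def pairwise_distances(features, centers):
    """Euclidean distance from every sample to every center, shape (N, k)."""
    return np.linalg.norm(features[:, None, :] - centers[None, :, :], axis=2)

def farthest_point_init(features, k):
    """Start from the first sample, then repeatedly add the farthest sample."""
    centers = [features[0]]
    for _ in range(1, k):
        d = pairwise_distances(features, np.array(centers)).min(axis=1)
        centers.append(features[int(d.argmax())])
    return np.array(centers, dtype=float)

def kmeans(features, k, n_iters=50):
    """Lloyd's algorithm: alternate assignment and center update."""
    centers = farthest_point_init(features, k)
    for _ in range(n_iters):
        assign = pairwise_distances(features, centers).argmin(axis=1)
        new_centers = centers.copy()
        for j in range(k):
            members = features[assign == j]
            if len(members) > 0:          # leave empty clusters where they are
                new_centers[j] = members.mean(axis=0)
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    # Final assignment against the final centers.
    assign = pairwise_distances(features, centers).argmin(axis=1)
    return assign, centers

rng = np.random.default_rng(0)
blob_a = rng.normal(loc=0.0, scale=0.5, size=(50, 2))
blob_b = rng.normal(loc=10.0, scale=0.5, size=(50, 2))
features = np.vstack([blob_a, blob_b])   # stand-in for extracted scan features

assign, centers = kmeans(features, k=2)
print(np.bincount(assign))
```

For image data, `features` would typically be flattened pixels or embeddings from a trained network, and the cluster assignments can then be compared against the true labels.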

Key Takeaways

This project illustrates:

- The application of deep learning and interpretability techniques to medical imaging tasks.

- Techniques for addressing data imbalance in real-world datasets.

- Hands-on experience with both supervised and unsupervised learning approaches.

- Preparation for tackling real-world challenges in medical AI research.

The cover GIF for the project is reproduced from Nature Scientific Reports, under the Creative Commons Attribution 4.0 International License. Full credit to the original authors.